VASS

Semi-Supervised Algorithm Visualizer

A little bit of theory...

Semi-supervised learning is the branch of machine learning that uses labeled and unlabeled data together to perform learning tasks. It sits between supervised and unsupervised learning.

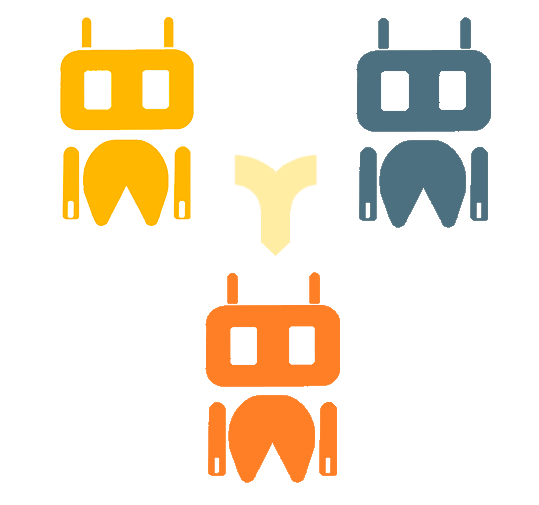

Among all semi-supervised algorithms, this project focuses on inductive methods. Their idea is simple: they build a classifier that predicts labels for new data. The algorithms presented here share this objective and belong to a more specific family: wrapper methods. Wrapper methods are based on pseudo-labeling, the process in which classifiers trained on the labeled data generate labels for the unlabeled data. Once this process is completed, the classifier is retrained with these new labels included.
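The pseudo-labeling loop described above can be sketched in a few lines of Python. This is only an illustration, not the tool's implementation: the nearest-centroid classifier, the function names (`fit_centroids`, `predict`, `pseudo_label_once`) and the toy data are all assumptions made for the example. It runs a single round and accepts every pseudo-label, whereas practical wrapper methods usually repeat the round and keep only confident predictions.

```python
import math


def fit_centroids(X, y):
    """Train a toy nearest-centroid classifier: one mean point per class."""
    sums, counts = {}, {}
    for features, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest to the given point."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


def pseudo_label_once(X_labeled, y_labeled, X_unlabeled):
    """One pseudo-labeling round (hypothetical helper for illustration)."""
    # 1. Train the classifier on the labeled data only.
    model = fit_centroids(X_labeled, y_labeled)
    # 2. Let it generate labels for the unlabeled data.
    pseudo_labels = [predict(model, x) for x in X_unlabeled]
    # 3. Retrain, incorporating the newly labeled data.
    retrained = fit_centroids(X_labeled + X_unlabeled, y_labeled + pseudo_labels)
    return pseudo_labels, retrained


# Toy data (made up): two labeled points and three unlabeled ones.
X_labeled = [[0.0, 0.0], [1.0, 1.0]]
y_labeled = ["a", "b"]
X_unlabeled = [[0.2, 0.1], [0.9, 0.8], [0.1, 0.3]]

pseudo_labels, retrained = pseudo_label_once(X_labeled, y_labeled, X_unlabeled)
print(pseudo_labels)
```

After the round, `retrained` has been fitted on five points instead of two, which is the benefit the unlabeled data is meant to bring.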


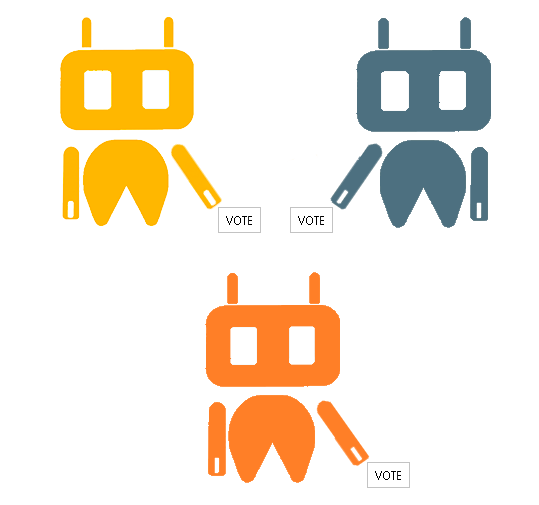

In the following cards, four of the most representative semi-supervised learning algorithms are presented: Self-Training, Co-Training, Tri-Training and Democratic Co-Learning.

Internally, each algorithm uses one or several classifiers (wrapper methods). The algorithms also differ in the number of views they take on the data, a view being the subset of the dataset's attributes that the algorithm uses to learn the model. Unlike a single-view algorithm, a multi-view algorithm treats the dataset's attributes as multiple subsets. For example, in the Co-Training algorithm, the first classifier might only "see" half of the attributes while the second one sees the other half. Each classifier works only with its own subset of attributes.
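To make the notion of a view concrete, the following minimal sketch splits a small dataset into two views, as in the Co-Training example above. The helper name `split_in_two_views` and the sample rows are assumptions for illustration only. Cutting the columns in half is just one possible way to build views and is not necessarily how the tool does it.

```python
def split_in_two_views(rows):
    """Split every row's attributes into two halves, one per view."""
    half = len(rows[0]) // 2
    view_a = [row[:half] for row in rows]  # attributes seen by the first classifier
    view_b = [row[half:] for row in rows]  # attributes seen by the second classifier
    return view_a, view_b


# Made-up dataset: two instances with four attributes each.
rows = [
    [5.1, 3.5, 1.4, 0.2],
    [6.2, 2.9, 4.3, 1.3],
]

# A single-view algorithm would use `rows` as is, with every attribute visible.
view_a, view_b = split_in_two_views(rows)
print(view_a)  # first two attributes of each instance
print(view_b)  # last two attributes of each instance
```

Each classifier would then be trained only on its own view, while both views still describe the same instances in the same order.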


Objective

The aim of this tool is to make it easier to understand, through visualizations combined with theoretical concepts, how the main semi-supervised algorithms actually work.

Selecting any of the algorithms takes you to the step where you load the dataset. You can then configure the algorithm with the desired parameters and, finally, obtain a visualization of the training process.
